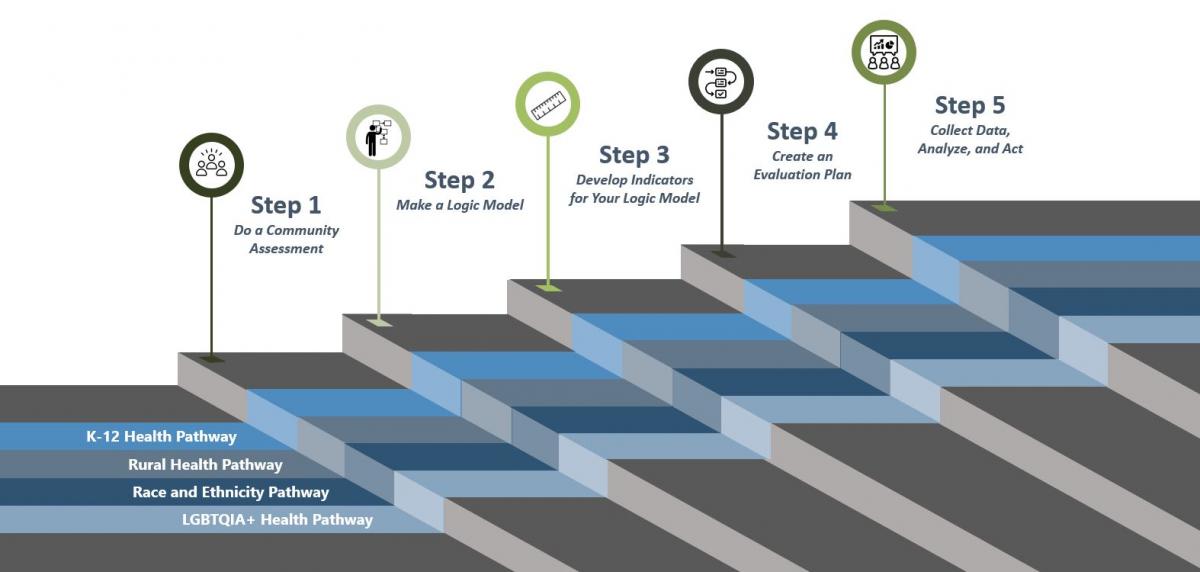

Are you writing a proposal or working on a project? Incorporating an evaluation into project design and implementation is essential for understanding how well the project achieves its goals. The Evaluation Planning and Pathway resources presented here provide guidance and tools to effectively design and carry out an evaluation of your project.

Evaluation design is broken down into 5 steps, which you can explore in detail using NEO’s Evaluation Booklets. Basic steps and worksheets are presented in the tabs above. While you are developing your evaluation plan, it is important to consider the context of your program. Context can change how your evaluation is designed and implemented, because different settings and populations have different needs and abilities to participate in evaluations. For this reason, the 5 steps are applied to 4 common populations (K-12 Health, Rural Health, Race & Ethnicity, and LGBTQIA+ Health) in separate pathways.

Each pathway presents special considerations, resources, and tools for evaluating a project that works with that population. You can explore the pathways embedded in the evaluation steps below, or explore each pathway on its own using the menu buttons above. Each pathway includes a real-life example that walks step by step through how a program created its evaluation plan, and each pathway concludes with an example evaluation plan created using the 5 steps to an evaluation.

Working on a project with a small award (less than $10,000)? Use the Small Awards Evaluation Toolkit and Evaluation Worksheets to assist you in determining key components for your evaluation!

5 Steps to an Evaluation

The first step in designing your evaluation is a community assessment. A community assessment helps you determine the health information needs of the community, the community resources that would support your project, and information to guide you in your choice and design of outreach strategies. The community assessment phase is completed in three segments:

- Get organized

- Gather information

- Assemble, interpret, and act on your findings

Get Organized

- This phase includes outreach, background research, networking, reflecting on the evaluation and program goals, and formulating evaluation questions.

- Conduct a stakeholder analysis to identify individuals or groups who are particularly proactive or involved in the community. Find out why they are involved, what is going well in their community, and what they would like to see improved.

- Network and identify a team of advisors

- Conduct a literature review

- Use the Positive Deviance (PD) approach to identify early adopters. More information on Positive Deviance

- Take an inventory of what you already know and what you don’t know. More information on SWOT Analysis

- Develop community assessment questions

Gather Information

- This phase includes gathering data from different external and internal sources to inform the development of your program and evaluation plan.

- Collect data about the community

- Secondary sources (some suggestions)

- Primary sources (interviews, focus groups, questionnaires, observations, site visits, online discussions)

Assemble, Interpret, and Act on Your Findings

- This phase includes processing the information gathered into understandable takeaways that can be used for the program and the evaluation.

- Interpret findings and make project decisions

The second step in designing your evaluation is to make a logic model. The logic model is a helpful tool for planning a program, implementing a program, monitoring the progress of a program, and evaluating the success of a program.

Make a Logic Model

- Consider how the program's logic model can help you determine what is, or is not, working in the program's design to achieve the desired results.

- Be open to new and alternative patterns in your logic model. Have stakeholders and community members review your logic model, paying particular attention to how change occurs across it. Listen to people who can say whether the strategies are beneficial and whether they are likely to succeed.

- How to create a logic model

- Outcomes are the results or benefits of your project: why you are doing the project

- Short-term outcomes such as changes in knowledge

- Intermediate outcomes such as changes in behavior

- Long-term outcomes such as changes in individuals’ health or medical access, social conditions, or population health

- More information on outcomes

- Blank Logic Model Template Worksheet: Logic Models


Tearless Logic Model

- To facilitate the creation of the logic model, community-based organizations can consider using the Tearless Logic Model process.

- The Tearless Logic Model uses a series of questions to assist non-evaluators in completing the components of the logic model. Questions used in the Tearless Logic Model

Knowledge, Attitudes, & Behaviors

- Programming should consider improvement in the knowledge-attitude-behavior continuum among program participants as a measure of success. The process of influencing health behavior through information, followed by attitude changes and subsequent behavior change, should be documented in the logic model.

- Focusing on behavior change is more likely to require Institutional Review Board (IRB) approval.

- Knowledge Acquisition:

- What is the program trying to teach or show program participants?

- What understanding would a program participant gain over the course of the program? Often knowledge acquisition occurs as a short-term outcome of the program.

- Be sure to examine not only what is learned, but where, when, and how program participants will learn it.

- Attitude Change:

- What mindsets or beliefs is the program seeking to build or change? Be sure to consider cultural differences in attitudes in the community you are working with.

- Are there misconceptions about the topic, and do those beliefs change after the program has been implemented?

- To what extent do participants agree with statements related to the new material presented?

- Behavior Change:

- After some time has passed since the program was implemented, are participants' actions different from their actions before the program began?

- Are the new behaviors in alignment with the expectations of the program?

- Note that most NNLM projects focus on the dissemination of health information/health education and often do not take place over a long enough period of time to observe behavior change.

- As such, measuring behavior change may be outside the scope of an NNLM-funded project unless the project runs for multiple cycles over an extended period of time.

The third step in designing your evaluation is to select measurable indicators for the outcomes in your logic model. Indicators are observable signs of your outcomes and will help you measure your achievement. Identify indicators that align with the outcomes in your logic model. Tracking these indicators over time will help you reflect on your progress and collect and demonstrate results.

Measurable Indicators

- Consider whether your outcomes are short-term, intermediate, or long-term

- Most NNLM projects are of short duration and should focus only on short-term or intermediate outcomes.

- It may be necessary to use more than one indicator to cover all elements of a single outcome.

- For your outcomes (mostly short-term and intermediate), identify:

- Indicators (observable signs of the outcome)

- Target criteria (level that must be attained to determine success)

- Time frame (the point in time when the threshold for success will be achieved)

- How to develop measurable indicators

- Outcome Indicator Blank Worksheet (top section) Worksheet: Measurable Indicators
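To make the relationship between an indicator, its target criterion, and its time frame concrete, here is a minimal Python sketch of one worksheet row and the arithmetic for checking whether the target was reached. The class and function names, and the example outcome, indicator, and 75% target, are hypothetical illustrations, not part of the NEO worksheet.

```python
from dataclasses import dataclass


@dataclass
class OutcomeIndicator:
    """One row of an outcome indicator worksheet (field names are illustrative)."""

    outcome: str           # short-term or intermediate outcome from the logic model
    indicator: str         # observable sign of the outcome
    target_percent: float  # target criterion: % of respondents who must show the indicator
    time_frame: str        # when the target should be reached


def percent_achieved(met_count: int, respondents: int) -> float:
    """Percentage of respondents who showed the indicator."""
    if respondents <= 0:
        raise ValueError("respondents must be a positive number")
    return 100 * met_count / respondents


def target_met(indicator: OutcomeIndicator, met_count: int, respondents: int) -> bool:
    """True if the achieved percentage reaches the indicator's target criterion."""
    return percent_achieved(met_count, respondents) >= indicator.target_percent


if __name__ == "__main__":
    # Hypothetical example values, not drawn from a real project.
    example = OutcomeIndicator(
        outcome="Participants can locate reliable health information online",
        indicator="Respondent names at least one trusted health website on the post-session survey",
        target_percent=75.0,
        time_frame="Immediately after each training session",
    )
    achieved = percent_achieved(42, 50)
    print(f"{achieved:.1f}% achieved; target met: {target_met(example, 42, 50)}")
```

In this hypothetical example, 42 of 50 respondents meet the indicator (84%), which exceeds the 75% target. The time frame is stored alongside the target so that the check is run at the point in time the worksheet specifies.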

Demographic Data

- Collect data on demographics as part of your survey process. It is important to understand your participants' backgrounds and how that may affect their engagement with your program.

- During analysis, compare differences in backgrounds against outcome results. If there are gaps identified in reaching certain populations, consider adjustments to program implementation to serve all participants equitably.

- More information on collecting demographic data
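One way to compare differences in backgrounds against outcome results is to calculate the outcome rate within each demographic group and flag groups that fall well below the overall rate. The Python sketch below assumes survey responses are stored as one dictionary per respondent. The field names, the sample data, and the 10-percentage-point margin are hypothetical choices for illustration.

```python
from collections import defaultdict


def outcome_rates_by_group(responses, group_field, outcome_field):
    """Percent of respondents in each demographic group who met the outcome.

    Respondents who left the demographic question blank are grouped as "Not reported".
    """
    totals = defaultdict(int)
    met = defaultdict(int)
    for response in responses:
        group = response.get(group_field) or "Not reported"
        totals[group] += 1
        if response.get(outcome_field):
            met[group] += 1
    return {group: 100 * met[group] / totals[group] for group in totals}


def overall_rate(responses, outcome_field):
    """Percent of all respondents who met the outcome."""
    if not responses:
        raise ValueError("no responses to analyze")
    return 100 * sum(1 for r in responses if r.get(outcome_field)) / len(responses)


def flag_gaps(rates, overall, margin=10.0):
    """Groups whose rate is more than `margin` percentage points below the overall rate."""
    return sorted(group for group, rate in rates.items() if overall - rate > margin)


if __name__ == "__main__":
    # Hypothetical survey responses: one dict per respondent.
    responses = (
        [{"age_group": "18-34", "met": m} for m in (True, True, True, False)]
        + [{"age_group": "35-64", "met": m} for m in (True, True, True, True)]
        + [{"age_group": "65+", "met": m} for m in (True, False, False, False)]
    )
    rates = outcome_rates_by_group(responses, "age_group", "met")
    print(rates)
    print(flag_gaps(rates, overall_rate(responses, "met")))
```

A flagged group is a prompt to look more closely at program implementation, not a conclusion in itself. With small groups, a single respondent can shift a percentage substantially.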

The fourth step in designing your evaluation is to create the evaluation plan. An evaluation plan describes how the project will be evaluated. It includes the purpose of the evaluation, the evaluation questions, and a timetable or work plan, as well as a description of the data collection tools to be used, an analysis framework, and a section explaining how the data will be used and disseminated. An evaluation plan is often a key component of a grant proposal, but it will also serve as your guide for implementing the evaluation. This phase is completed in three segments:

- Defining evaluation questions

- Developing the evaluation design

- Conducting an ethical review

Defining Evaluation Questions

Evaluation questions help define what to measure and provide clarity and direction to the project.

Process evaluations and outcome evaluations are two common types of evaluations with different purposes. Consider which makes the most sense for you and the objectives of your evaluation (you DO NOT need to do both). Then explore the resources to inform your evaluation plan.

Process Questions - Are you doing what you said you'd do?

- Process evaluation questions address program operations – the who, what, when, and how many related to program inputs, activities, and outputs.

- Process Evaluation Worksheet Book 2 Worksheet: Process Evaluation

- Sample Process Evaluation Questions and Evaluation Methods

The CDC recommends the following process to guide development of process evaluation questions that reflect the diversity of stakeholder perspectives and the program's most important information needs:

- Gather your stakeholders: How stakeholders are involved in planning the program may vary by context. It may be best to meet to develop the questions together, or the person(s) in charge of the evaluation plan may prefer to draft a list of questions and solicit feedback before finalizing it.

- Review supporting materials: These may include program design documents, the logic model, the work plan, and/or community-level data available from external sources.

- Brainstorm evaluation questions: Start with a specific program activity, but be sure to consider the full program. Use goals and objectives from the strategic plan, and inputs, activities, and outputs from the program logic model, to create process evaluation questions.

- Sort evaluation questions into categories that are relevant to all stakeholders: It is difficult to limit evaluation questions, but few programs have the time or resources to answer every question! Prioritize those that are most useful to all stakeholders.

- Decide which evaluation questions to answer: Prioritize questions that:

  - Are important to program staff and stakeholders

  - Address the most important program needs

  - Reflect program goals and objectives outlined in any program strategy or design documents

  - Can be answered using the time and resources available to program staff, including staff expertise

  - Provide relevant information for making program improvements

- Verify questions are linked to the program: Once questions are agreed upon, revisit your strategic plan, work plan, and/or logic model to ensure the questions are linked to these program documents.

- Determine how to collect the data required: This includes deciding who will be responsible for collecting and analyzing the data, when the data can be collected, and from whom the data will be collected.

Outcome Questions - Are you accomplishing the WHY of what you wanted to do?

- Outcome evaluation questions address the changes or impact seen as a result of program implementation.

- Use the same CDC process described for process evaluation questions to develop outcome evaluation questions.

- Consider whether the impact assessed relates to short-term, intermediate, or long-term outcomes outlined in your logic model.

- Outcome Objective Blank Worksheet (bottom section) Book 2 Worksheet: Outcome Objectives

- Data Sources: Examples of Data Sources

- Evaluation Method: Examples of Evaluation Methods

- Data Collection Timing: When you collect the data (e.g., immediately after training, at the end of the project)

Evaluation Design

- Evaluation design influences the validity of the results.

- Most NNLM grant projects will use a non-experimental or quasi-experimental design.

- Quasi-experimental evaluations include surveys of a comparison group (individuals who are not participating in the program but have characteristics similar to the participants) to isolate the impact of the program from other external factors that may change attitudes or behaviors.

- Non-experimental evaluations only survey program participants.

- If comparison groups are part of your evaluation design, use a 'do no harm' approach that makes the program available to those in the comparison group after the evaluation period has ended.

- More information on Evaluation Design
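To illustrate how a comparison group helps isolate the program's impact, the Python sketch below subtracts the comparison group's average change from the participants' average change (a simple difference-in-differences calculation, which is one common approach and is not prescribed by this guide). The scores are hypothetical responses on a 1-5 agreement scale, and the function names are illustrative. The sketch does not test statistical significance or adjust for differences between the groups.

```python
from statistics import mean


def mean_change(pre_scores, post_scores):
    """Average post-survey score minus average pre-survey score for one group."""
    return mean(post_scores) - mean(pre_scores)


def estimated_program_effect(participant_pre, participant_post, comparison_pre, comparison_post):
    """Participants' change minus the comparison group's change.

    The comparison group's change stands in for what would have happened
    anyway because of external factors.
    """
    participant_change = mean_change(participant_pre, participant_post)
    comparison_change = mean_change(comparison_pre, comparison_post)
    return participant_change - comparison_change


if __name__ == "__main__":
    # Hypothetical agreement scores on a 1-5 scale.
    participant_pre, participant_post = [2, 3, 2, 3], [4, 4, 3, 5]
    comparison_pre, comparison_post = [2, 3, 3, 2], [3, 3, 3, 3]
    print("Non-experimental (participants only):", mean_change(participant_pre, participant_post))
    print("Quasi-experimental estimate:", estimated_program_effect(
        participant_pre, participant_post, comparison_pre, comparison_post))
```

In this hypothetical data, participants' scores rise by 1.5 points, but the comparison group's scores also rise by 0.5 points without the program. A non-experimental design would credit the full 1.5 points to the program, while the comparison group suggests an estimated effect closer to 1.0.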

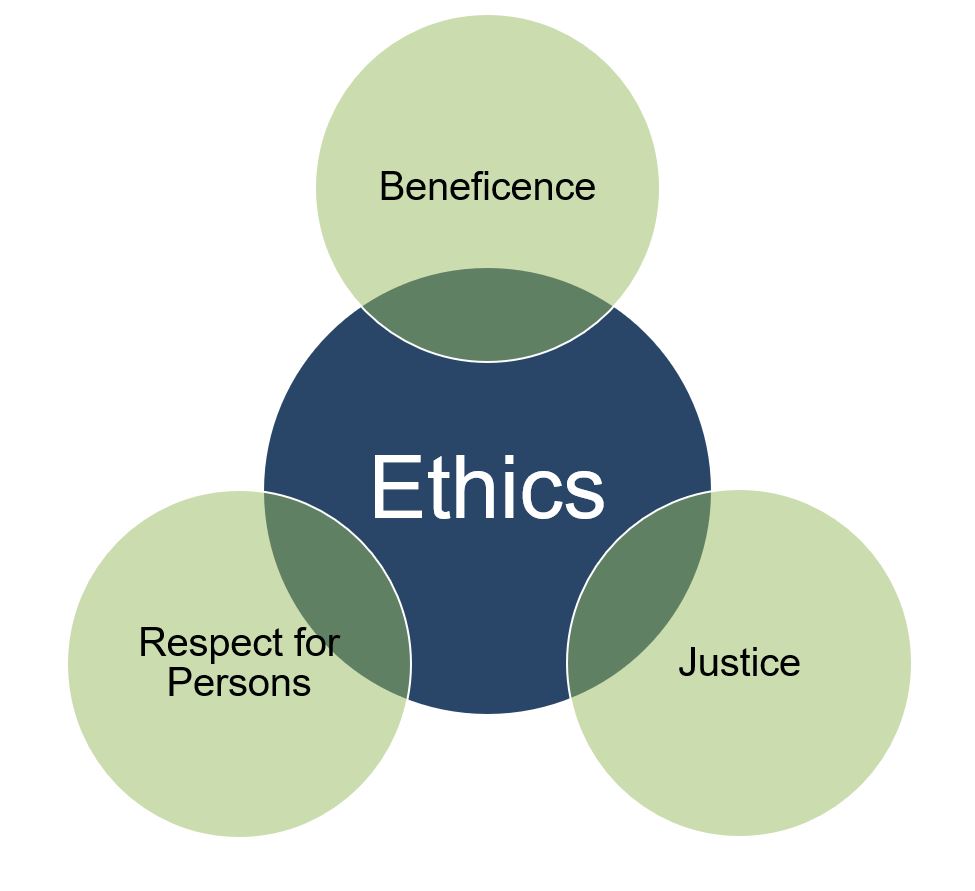

Ethical Considerations

Consider the Belmont Report principles to examine ethical considerations:

- Respect for Persons: Protecting the autonomy of all people, treating them with courtesy and respect, and allowing for informed consent

- Beneficence: The philosophy of "Do no harm" while maximizing benefits for the research project and minimizing risks to the research subjects

- Justice: Ensuring that reasonable, non-exploitative, and well-considered procedures are administered fairly and equally, with a fair distribution of costs and benefits among potential research participants

Trauma-Informed Evaluation

- Asking someone about trauma is asking that person to recall potentially difficult events from their past.

- If it is absolutely necessary for the evaluation to ask questions about potentially traumatic events, use a trauma-informed approach to collect data sensitively.

- Amherst H. Wilder Foundation Fact Sheet on Trauma-Informed Evaluation

- More information on Trauma-Informed Evaluation

Ethical Review

- The Institutional Review Board (IRB) is an administrative body established to protect the rights and welfare of human research subjects recruited to participate in research activities.

- The IRB is responsible for reviewing all research involving human participants, whether funded or not, before the research begins.

- The IRB is concerned with protecting the welfare, rights, and privacy of human subjects.

- The IRB has authority to approve, disapprove, monitor, and require modifications in all research activities that fall within its jurisdiction as specified by both the federal regulations and institutional policy.

- More information on the IRB, including contact information for your local IRB

- Depending on the nature of the evaluation, the IRB may exempt a program from approval, but an initial review by the Board is recommended for all programs working with minors.