This pathway complements NNLM’s resources on race and ethnicity by exploring evaluation considerations for programs that seek to improve health outcomes for people with a specific ethnicity or ethnicities.

Because of systemic inequalities in the United States, these programs will typically focus on people who identify as Black, Indigenous, and People of Color.

Working on a project with a small award (less than $10,000)? Use the Small Awards Evaluation Toolkit and Evaluation Worksheets to assist you in determining key components for your evaluation!

While you are developing your evaluation plan, it is important to consider the context of your program. The context can change how your evaluation is designed and implemented because different settings and populations have different needs and abilities to participate in evaluations.

NNLM stands against racism in health. Review the following resources to learn more about health disparities, the experience of people of color, and racism in health:

- Clinical Conversations Training Program

- “Because I See What You Do”: How Microaggressions Undermine the Hope for Authenticity at Work

- Recording of “Because I See What You Do”: How Microaggressions Undermine the Hope for Authenticity at Work

- Please visit NNLM.gov or contact your local regional medical library for more resources

- Understand how racism can change the context of your evaluation. Racism in the United States has a complex, and often controversial, history. As the Annie E. Casey Foundation states, “the concept of racism is widely thought of as simply personal prejudice, but in fact, it is a complex system of racial hierarchies and inequities.”

- NNLM has compiled common microaggressions, organized by themes that can be reinforced or minimized by the methodology and conclusions of your evaluation.

- Take a stance of anti-racism. A stance of anti-racism actively opposes racism, in all forms at all levels. It includes working against racism on a personal level and working against racism on a structural or institutional level.

- In program evaluation, this includes designing evaluations to accurately capture the lives of the program participants, and clearly calling out and communicating racism when it is encountered.

| Type of Racism | Description |
|---|---|
| Internalized Racism | Internalized racism describes the private racial beliefs held by and within individuals. The ways we absorb social messages about race and adopt them as personal beliefs, biases and prejudices are all within the realm of internalized racism. For people of color, internalized oppression can involve believing in negative messages about oneself or one’s racial group. For white people, internalized privilege can involve feeling a sense of superiority and entitlement or holding negative beliefs about Black and people of color. |
| Interpersonal Racism | Interpersonal racism is how our private beliefs about race become public when we interact with others, such as microaggressions and tone policing. |
| Institutional Racism | Institutional racism is racial inequity within institutions and systems of power, such as places of employment, government agencies and social services. It can take the form of unfair policies and practices, discriminatory treatment, and inequitable opportunities and outcomes. |
| Structural Racism | Structural racism (or structural racialization) is the racial bias across institutions and society. It describes the cumulative and compounding effects of an array of factors that systematically privilege white people and disadvantage Black and people of color. |
| Systemic Racialization | Systemic racialization or systemic racism describes a dynamic system that produces and replicates racial ideologies, identities, and inequities. Systemic racialization is the well-institutionalized pattern of discrimination that cuts across major political, economic, and social organizations in a society. |

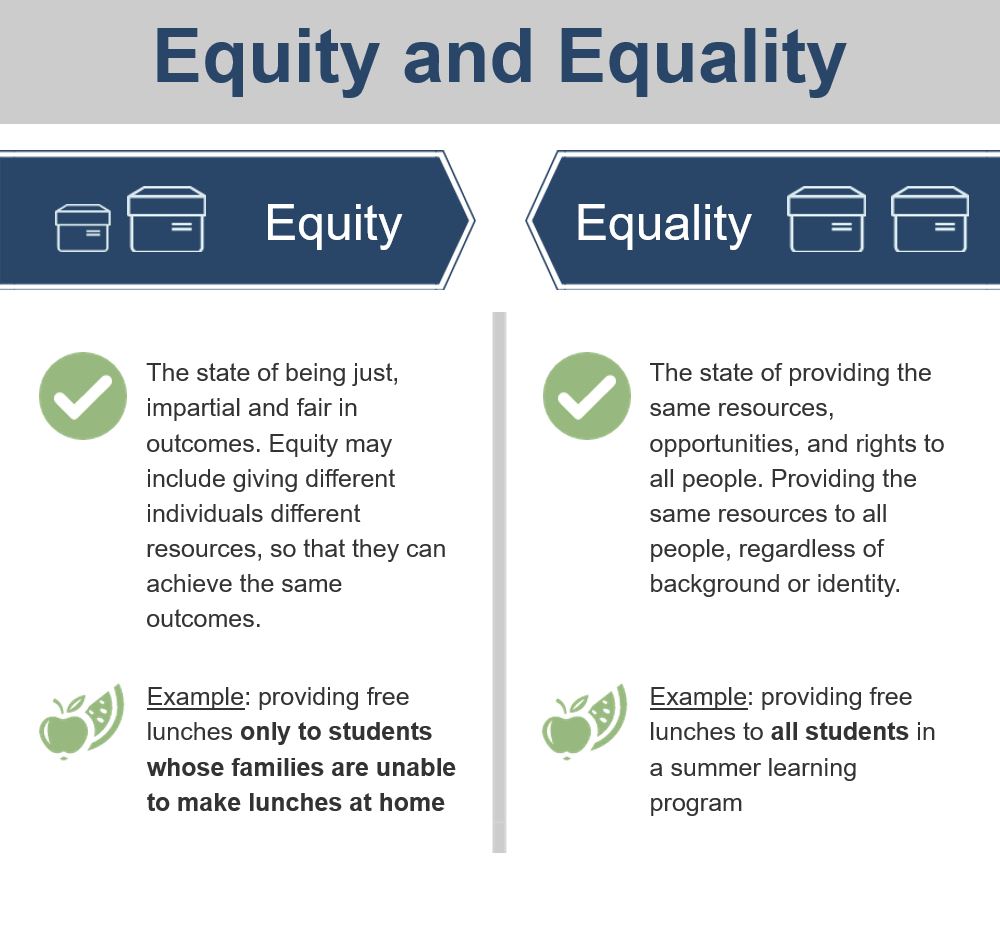

- Clarify the definition of success. Programs (and evaluations) can define activities and outcomes from an equity and/or an equality perspective. It is important to understand the differences between equity and equality, as they are often used interchangeably but are defined by different concepts and have correspondingly different criteria for success.

- Measure multiple dimensions of discrimination. Kimberlé Crenshaw's Intersectionality framework states that people experience multiple, overlapping, and cumulative forms of discrimination, such as racism, sexism, classism, and ableism.

- Crenshaw recognized that programs have failed to address the experiences of Black women by focusing on only one dimension of their identity - either being Black or being female - rather than the intersection of those dimensions.

- These different forms, or dimensions, of oppression intersect in complex ways, so that individuals who identify with multiple dimensions of oppression experience discrimination in different ways.

- This may justify the collection of additional data related to the needs of specific communities within your group of program participants.

- More information on intersectionality

- Consult an intersectionality chart. When thinking through your evaluation, it may be beneficial to consult an intersectionality chart, like the one below, to think through how different groups may experience your program differently, and effective ways of measuring the experiences of all groups.

| Privilege/Society Normative | Oppression/Resistance | |
|---|---|---|
| White | Racism | Black, People of Color |
| Upper & Upper-Middle Class | Classism | Working Class |
| Male and Masculine, Female and Feminine | Genderism | Non-binary or transgender |
| Male | Sexism | Female |
| European heritage | Eurocentrism | Non-European origin |
| Heterosexual | Heterosexism | Lesbian, Gay, Bisexual, Queer, Asexual++ |
| Wealth | Wealthism | Poor/financially insecure |
| People with no disabilities, mental good health | Ableism | People with disabilities, people with mental illness |
| Credentialed | Educationalism | No or limited education |
| Young | Ageism | Old |
| Attractive | Politics of Appearance | Unattractive |
| Anglophone | Language Bias | English as a second language |
| Light, pale skin | Colorism | Dark Skinned |
| Gentile, Not Jewish | Anti-Semitism | Jewish |
| Fertile | Pro-natalism | Infertile |

- Consider how your unconscious behavior can change how you approach the evaluation. Implicit biases, unconscious associations between groups of people and certain characteristics or behaviors, affect how we view other races, ethnicities, genders, abilities, etc.

- In evaluation, implicit biases can change how we perceive the success of outcomes, how we collect and share information, and how we form conclusions. They can also inform decision-making processes.

- Test for your biases. Project Implicit has a free and publicly available Implicit Association Test that can assist in identifying unconscious biases so they can be more easily recognized and addressed.

Distrust of Research

- Know why there may be resistance to evaluation. Scientific and academic institutions have not always approached research ethically and equitably.

- Some research has targeted Black, Indigenous and People of Color as research subjects or models, without considering the wellbeing or harm the study could cause.

- The findings of research have been used to incorrectly declare the superiority of Caucasians, and the results have caused significant and lasting damage to communities.

- Despite the progress the field of research ethics has made, the wide variety of research failures have, rightfully, led to the distrust of research by many communities, races, and ethnicities.

- Ensure the safety and security of the community. Your evaluation should consider the distrust of research by clearly defining the ethics and ethical processes your evaluation will take.

- Actively developing relationships will build the trust of the community you are working with.

Step 1: Do a Community Assessment

The first step in designing your evaluation is a community assessment. The community assessment phase is completed in three segments:

- Get organized

- Gather information

- Assemble, interpret, and act on your findings

- This phase includes outreach, background research, networking, reflecting on the evaluation and program goals, and formulating evaluation questions.

- Conduct a stakeholder analysis to identify individuals or groups who are particularly proactive or involved in the community. Find out why they are involved, what is going well in their community, and what they would like to see improved.

Cultural Humility and Culturally Responsive Evaluation

- An evaluator should strive for cultural humility, so that they can approach evaluation from a place of humanity and understand the lived experiences of the cultures they are working with.

- More information on Cultural Humility

Cultural Humility in Evaluation

Adapted from the American Evaluation Association Core Concepts

- Culture is central to economic, political, and social systems as well as individual identity. Thus, all evaluation reflects culturally influenced norms, values, and ways of knowing—making cultural responsiveness integral to ethical, high-quality evaluation.

- Given the diversity of cultures within the United States, cultural humility is fluid. An evaluator who is well prepared to work with a particular community is not necessarily responsive to another.

- Cultural humility in evaluation requires that evaluators maintain a high degree of self-awareness and self-examination to better understand how their own backgrounds and other life experiences serve as assets or limitations in the conduct of an evaluation.

- Culture has implications for all phases of evaluation—including staffing, development, and implementation of evaluation efforts as well as communicating and using evaluation results.

Stakeholder Analysis

- Use the Positive Deviance (PD) approach to identify early adopters.

- Please note this process is time and labor intensive and requires a skilled facilitator comfortable with ambiguity and uncertainty. This process may not be feasible within the time constraints of the typical NNLM-funded project.

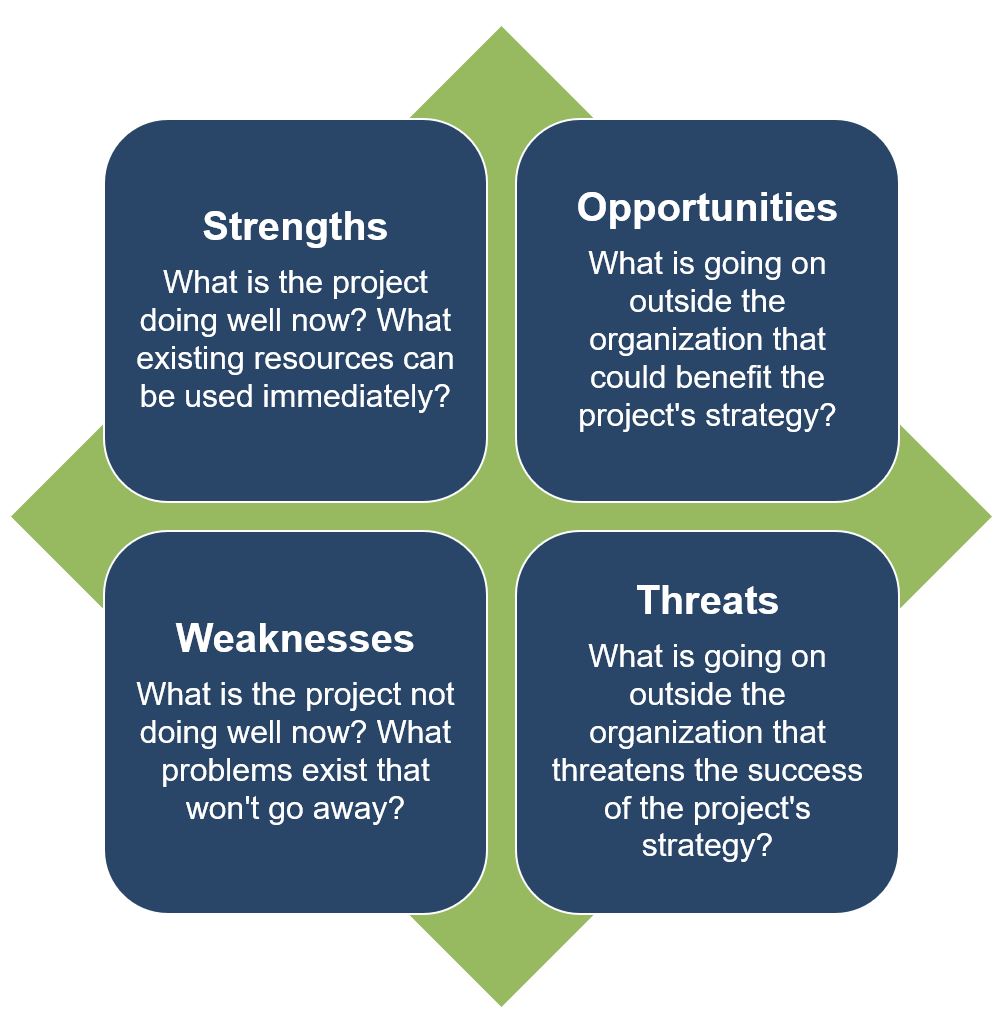

SWOT Analysis

- As part of the stakeholder analysis, conduct a SWOT Analysis for the program to identify what you and your team already know.

- A SWOT analysis is typically used to assess an organization or program's internal strengths and weaknesses and the opportunities and threats to the organization or program in the external target community.

- More information on SWOT Analysis

- Example SWOT Analysis for program working with an underrepresented community

- This phase includes gathering data from different external and internal sources to inform the development of your program and evaluation plan.

Listening Sessions

- Listening Sessions. Community listening sessions are semi-structured group gatherings that prompt discussion on a wide variety of topics.

- Listening sessions can begin an ongoing dialogue between the evaluation team, program team and members of the community the program works with.

- Facilitators of the listening sessions can use a facilitation guide to drive discussion, with key questions and topics to discuss.

- Participants in the listening session should be allowed the space and time to freely discuss topics without judgement or fear of retribution. An important role of the facilitator is to monitor group dynamics, to ensure that all individuals who have thoughts are heard, and to moderate any unsafe situations.

- Analysis of data from qualitative methods, including listening sessions, should include member checking, whereby one or more participants review the data collector's interpretations and results, to ensure that the data collector does not negatively influence the findings or miss the priorities and voices of the listening session participants.

Existing Data Sources

- Existing data, where available, can be a helpful resource for developing your evaluation plan.

- The following data sources CANNOT be used to report against program outcomes, but they may be helpful during a needs assessment or program design phase in understanding the context in which the program will be placed:

- NNLM Community Engagement Resources include story maps detailing information on selected communities to help programs make informed decisions that can better serve their communities.

- The American Community Survey (ACS) is an annual survey of communities that collects economic and social data. It collects some information at the sub-county level.

- The County Health Rankings provide an easy-to-understand health profile of counties in the United States.

- The Healthcare Cost and Utilization Project provides hospital health information at county and sub-county levels. A variety of ready-made tables are available, and you can also build your own table based on your information needs.

- The City Health Dashboard provides specific health metrics and overviews of cities in the United States.

- This phase includes processing the information gathered into understandable takeaways that can be used for the program and the evaluation.

- When working on community assessments as part of a larger evaluation to advance racial equity and/or equality, the results of the assessment can help to determine the potential benefits for and burdens of the program. After assembling the findings, check for ways that the program will harm or help the community you are working with.

- Defining concrete ways that the program will advance equity or equality clarifies how your program approaches issues of racism.

- The interpretation and representation of findings should be completed collaboratively with members of the community you are working with, so that decision-making authority and engagement are not siloed to only program and evaluation staff.

- Reporting the findings and interpretations from the community assessment back to the community you are working with ensures transparency and accountability from the very start of your evaluation.

Community Assessment Research Questions:

- Which populations are affected by the program activities and outcomes? Which are affected disproportionately by the program activities and outcomes?

- What are the potential positive impacts of program activities?

- What are the potential negative impacts of program activities?

- What actions can be taken to reduce the negative impacts and enhance the positive impacts of the program?

- Do the requirements of participating in the evaluation change any of the answers to the above questions?

Step 2: Make a Logic Model

The second step in designing your evaluation is to make a logic model. The logic model is a helpful tool for planning a program, implementing a program, monitoring the progress of a program, and evaluating the success of a program.

Make a Logic Model

- Consider how the program's logic model will assist in determining what is, or is not, working in the program's design to achieve the desired results.

- Be open to new and alternative patterns in your logic model. Have stakeholders and community members review your logic model, paying particular attention to how change occurs across the logic model. Listen to voices who can say whether the strategies are beneficial, and whether they could be successful.

- When using available evidence about how change happens, consider who the study included. Evidence that focuses on one race or ethnicity may not apply to another race or ethnicity, and evidence may not capture the complete diversity of experiences within race or ethnicity.

- For cultures that face oppression and discrimination, be careful of biases that interpret program participants as responsible for issues in their community (victim blaming), and not structural or systemic explanations for the issues.

- More information on Logic Models

- Example Logic Model for program working with underrepresented population

Tearless Logic Model

- To facilitate the creation of the logic model, community-based organizations can consider using the Tearless Logic Model process.

- The Tearless Logic Model uses a series of questions to assist non-evaluators in completing the components of the logic model. Questions used in the Tearless Logic Model

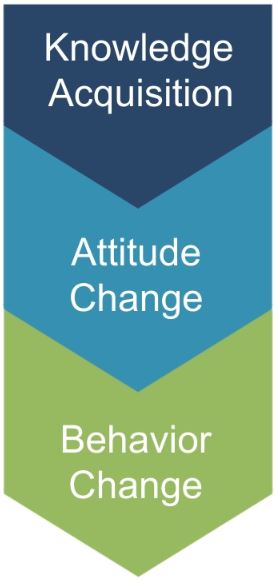

Knowledge, Attitudes, & Behaviors

- Programming should consider improvement in the knowledge-attitude-behavior continuum among program participants as a measure of success. The process of influencing health behavior through information, followed by attitude changes and subsequent behavior change, should be documented in the logic model.

- Focusing on behavior change is more likely to require Institutional Review Board (IRB) approval.

- Recognize that different lived experiences lead to different judgments about how change occurs. To help ensure that the logic model you design reflects specific racial/ethnic experiences, you may want to use evidence to support the changes described.

- How individuals process and act on health information can vary depending on the culture of the individual. Use this guide to develop an understanding of how to adapt health promotion in a culturally responsive way.

- Knowledge Acquisition:

- What is the program trying to teach or show program participants?

- What understanding would a program participant gain over the course of the program? Often knowledge acquisition occurs as a short-term outcome of the program.

- Be sure to examine not only what is learned, but where, when, and how program participants will learn it.

- Attitude Change:

- What mindsets or beliefs is the program seeking to build or change? Be sure to consider cultural differences in attitudes in the community you are working with.

- Make sure that the attitudes you are seeking to change are not rooted in a white supremacy model and are consistent with cultural practices and beliefs.

- To what extent do participants agree with statements related to the new material presented?

- Behavior Change:

- Are the actions of program participants different than what would have been expected without the program?

- It can be important to examine how power structures could alter or change the predicted outcomes.

- If an outcome is targeting a change in behavior, but power structures prohibit the behavior change, it would be important to note the influence of the power structures on achieving the goals of the program. See the contextual factors section for more information.

- Example: If a program seeks to present health information in a series of seminars, and attendance at the seminars is low, it could be caused by disinterest or it could be caused by limited transportation options for the community.

- Note that most NNLM projects focus on the dissemination of health information/health education and often do not take place over a long enough period of time to observe behavior change.

- As such, examining/measuring behavior change may be out of the scope of the NNLM-funded project unless the project runs for multiple cycles over an extended period of time.

Contextual Factors & Assumptions

- Contextual factors are the influences that underlie your logic model and could act on or change the model described.

- By noting the contextual factors, the program can plan for ways to reduce external factors that could negatively alter the desired outcomes.

- Clarifying assumptions allows for evaluators to reflect on their influence, biases, and connections to the communities in which they work. By making assumptions explicit, you can identify and discuss the assumptions made, allowing for open dialogue and review.

- Assumptions could also underlie how you expect certain groups to behave or act. It is important to define, discuss, check, and correct these assumptions before deciding on evaluation and program design.

- Example: If a program is seeking to improve a low-resource community’s health behaviors, one might assume that currently individuals have no knowledge of health information or that individuals currently do not have access to health information.

Step 3: Develop Indicators for Your Logic Model

The third step in designing your evaluation is to select measurable indicators for outcomes in your logic model.

Measurable Indicators

- Consider whether your outcomes are short-term, intermediate, or long-term

- Most NNLM projects are of short duration and should focus only on short-term or intermediate outcomes.

- It may be necessary to use more than one indicator to cover all elements of a single outcome.

- How to develop measurable indicators

- Example Measurable Indicators for a program working with an underrepresented population

General Guidance

- When developing indicators, seek to accurately capture the experiences of the people that you are working with. Consider the applicability of the indicators, and the way biases, assumptions, and discrimination may alter how they reflect reality.

- Indicators examining changes from programs working with specific races or ethnicities should consider that an individual’s perception of race can change how they report on specific indicators.

- When developing indicators, try to avoid wording indicators in a way that prompts implicit biases.

- Think through if there are multiple ways to measure the indicator, and avoid indicators that require people to rate or describe other people, including their intelligence or abilities.

- Use lessons learned from the community assessment to make indicators respectful and mindful of the dynamics of race and ethnicity.

- Standard assessments of mental or physiological state may not accurately reflect the experiences of Black, Indigenous and People of Color. Do not assume cross-cultural relevance of indicators, without checking the research or discussing your indicators with the community you are working with.

- Programs seeking to cause social change should consider that changes to norms, structures, and systems take considerable time. Consider how to measure incremental change, capturing a step towards a more widespread outcome.

Rockefeller Social Change Indicators

- The Rockefeller Foundation suggests measuring indicators of different social change outcomes. The dimensions listed below are the ways in which the indicator could be operationally defined or measured, and included in your evaluation plans.

| Social Change Indicator | Dimension |
|---|---|
| Leadership | |
| Degree and Equity of Participation | |
| Information Equity | |
| Collective Self Efficacy | |
| Sense of Ownership | |
| Social Cohesion | |
| Social Norms | |

Demographic Data

- Collect data on demographics as part of your survey process. It is important to understand your participants' backgrounds and how that may affect their engagement with your program.

- During analysis, compare differences in backgrounds against outcome results. If there are gaps identified in reaching certain populations, consider adjustments to program implementation to serve all participants equitably.

- More information on collecting demographic data
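The comparison of backgrounds against outcome results described above can be sketched in a few lines of Python. This is a minimal illustration, not an NNLM tool: the field names `language` and `met_outcome`, the sample rows, and the helper functions are all hypothetical. It reports, for each demographic group, the share of respondents who met an outcome and how far that share sits from the overall rate.

```python
from collections import defaultdict


def outcome_rate_by_group(responses, group_key, outcome_key):
    """Return {group: share of respondents in that group who met the outcome}."""
    totals = defaultdict(int)
    met = defaultdict(int)
    for row in responses:
        # Keep respondents who skipped the demographic question visible
        # instead of silently dropping them.
        group = row.get(group_key) or "Not reported"
        totals[group] += 1
        if row[outcome_key]:
            met[group] += 1
    return {group: met[group] / totals[group] for group in totals}


def gap_from_overall(responses, group_key, outcome_key):
    """Return {group: group rate minus the overall rate}."""
    overall = sum(1 for row in responses if row[outcome_key]) / len(responses)
    rates = outcome_rate_by_group(responses, group_key, outcome_key)
    return {group: rate - overall for group, rate in rates.items()}


# Hypothetical survey rows, for illustration only.
responses = [
    {"language": "English", "met_outcome": True},
    {"language": "English", "met_outcome": True},
    {"language": "Spanish", "met_outcome": True},
    {"language": "Spanish", "met_outcome": False},
    {"language": None, "met_outcome": False},
]

rates = outcome_rate_by_group(responses, "language", "met_outcome")
gaps = gap_from_overall(responses, "language", "met_outcome")
for group in rates:
    print(f"{group}: rate={rates[group]:.2f}, gap={gaps[group]:+.2f}")
```

A negative gap is a prompt to look at program implementation, not a finding about the group itself. With small groups, rates can swing widely on one or two responses, and reporting very small groups may make individuals identifiable, so treat this kind of output as a starting point for discussion with the community.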

Step 4: Create an Evaluation Plan

The fourth step in designing your evaluation is to create the evaluation plan. This includes:

- Defining evaluation questions

- Developing the evaluation design

- Conducting an ethical review

- Evaluation questions help define what to measure and provide clarity and direction to the project.

- Process questions relate to the implementation of the program and ask questions about the input, activities, and outputs columns of the logic model.

- Example Process Evaluation Questions for a program working with an underrepresented population

- Outcome questions relate to the effects of the program and relate to the short, intermediate, and long-term columns of the logic model.

- Example Outcome Evaluation Questions for a program working with an underrepresented population

- Culturally responsive evaluation questions value a diversity of perspectives. Including a diversity of perspectives in your evaluation questions helps to drive your evaluation towards a fair assessment of the program.

- Try to anticipate how the answers to your questions will be utilized and interpreted, and whether the answers to your questions will best reflect the lives of the participants in your program. Avoid phrasing questions in a way that compares groups to a single cultural standard.

- Since culture is responsible for interpreting what is “normal,” a question that seeks to measure and normalize a pattern or behavior should include the racial and/or ethnic perspectives of your program participants.

- Evaluation design influences the validity of the results.

- Most NNLM grant projects will use a non-experimental or quasi-experimental design.

- Quasi-experimental evaluations include surveys of a comparison group - individuals not participating in the program but with similar characteristics to the participants - to isolate the impact of the program from other external factors that may change attitudes or behaviors.

- Non-experimental evaluations only survey program participants.

- If comparison groups are part of your quasi-experimental design, use a 'do no harm' approach that makes the program available to those in the comparison group after the evaluation period has ended.

- Our implicit biases can change how we design evaluations because they influence our fundamental beliefs of society and what is of value. Examining our internalized biases can help clarify evaluation goals, indicators of change, and put into sharper focus what we perceive to be the dominance or inferiority of the communities we are working with.

- More information on Evaluation Design
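To make the role of a comparison group concrete, the sketch below compares the average pre-to-post change among participants with the average change in a comparison group. This is one common way to analyze a quasi-experimental design (often called difference-in-differences). It is offered as an illustration under assumptions, not as the method NNLM prescribes: it assumes both groups answered the same survey before and after the program, and the scores and function name are hypothetical.

```python
from statistics import mean


def difference_in_differences(participants, comparison):
    """Estimate program effect from (pre_score, post_score) pairs.

    Returns the participants' average change minus the comparison
    group's average change over the same period.
    """
    def average_change(pairs):
        return mean([post - pre for pre, post in pairs])

    return average_change(participants) - average_change(comparison)


# Hypothetical survey scores on a 1-5 scale, for illustration only.
participants = [(2, 4), (3, 5), (1, 3)]  # each person improved by 2
comparison = [(2, 3), (3, 4), (2, 3)]    # each person improved by 1

estimate = difference_in_differences(participants, comparison)
print(f"Estimated change attributable to the program: {estimate}")
```

Subtracting the comparison group's change removes shifts that would have happened without the program, such as a local health campaign running at the same time. The estimate is only as good as the similarity between the two groups, and with samples this small it should be read as descriptive rather than conclusive.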

Participatory Evaluation Approach

- The approach or framework of an evaluation can help to clarify the process and design. Using an approach grounds your evaluation in underlying principles and theories, which can be used as an internal check and guide for decision making.

- Participatory evaluation shifts the typical power dynamics of evaluations so that program participants have a stake in decision making and planning processes. Participatory approaches should be used in all NEO-member programs.

- Participatory approaches focus on involvement of program participants in all stages of planning and decision making, including the evaluation.

Ethical Considerations

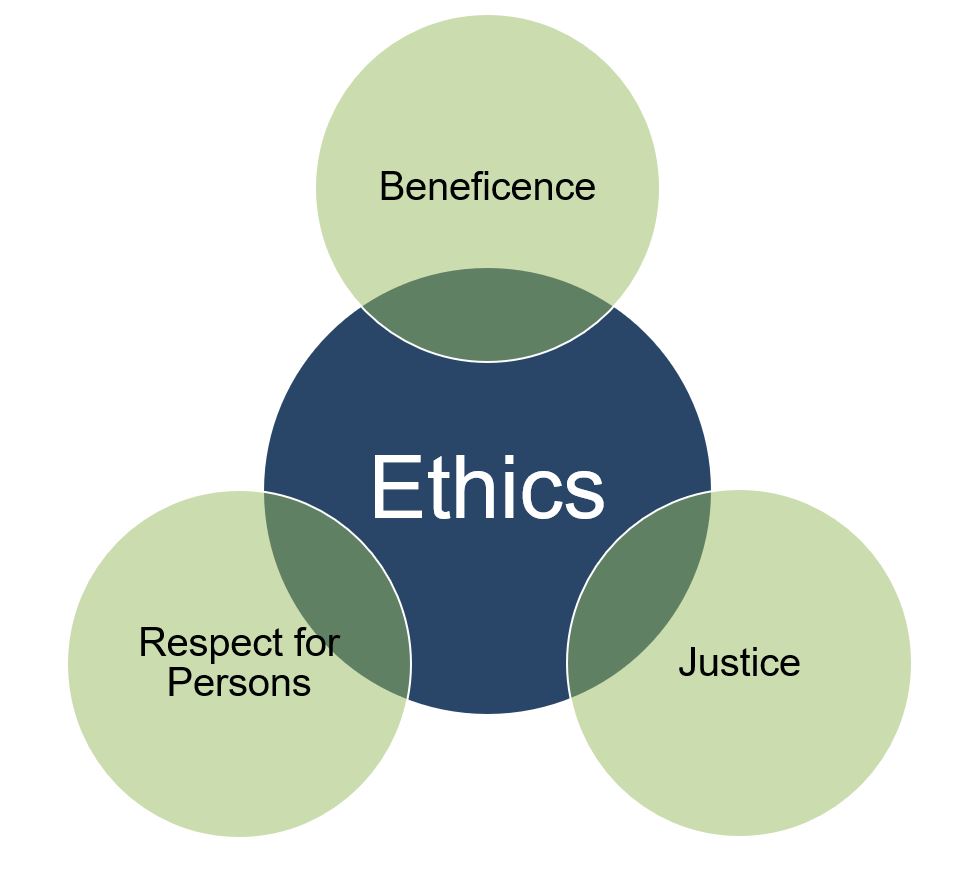

Consider the Belmont Report principles when examining ethical considerations for underrepresented populations:

- Respect for Persons:

- When evaluating programs with participants who are facing racism, discrimination, or oppression, consider the effect that power can have on a person’s ability to make decisions for themselves.

- If a person does not have relative power or feels as though they do not have relative power, they can be coerced into making decisions that they would not have otherwise made for themselves.

- When involving community members in the evaluation or obtaining consent for your evaluation, try to minimize the pressures individuals feel to act one way or another.

- Allowing anonymous decision making and feedback, when possible, can reduce the pressures individuals feel to behave in one way or another.

- Beneficence

- How will your evaluation balance the burden that participants take to complete the evaluation, with the benefits of completing the evaluation? In the process of completing the evaluation, are you asking your participants to do things or use resources that they might not have easy access to?

- Black, Indigenous and People of Color have felt a disproportionate burden of research; they are more commonly researched than other populations.

- The more frequent research leads to research fatigue, where a population, community, or individual tires of engaging in research. Research fatigue can make it challenging to participate in evaluation or research, meaning that the burden of your evaluation is greater.

- To better balance the burdens, consider how to increase the benefits individuals receive from participating in the evaluation. Benefits can include improved programming, or gifts or stipends for participating in the evaluation.

- Justice

- Consider how your evaluation would exacerbate or improve the distribution of benefits.

- In a society with racism, discrimination and oppression, justice can be difficult to assess as it is entrenched in the systems, structures, and norms of the society.

- Try to assess how the program affects different groups of people within your program.

- Consider that there may be groups that are less visible in a community than others, including those who do not speak English, older adults, disabled individuals, low-income households, and medically vulnerable people.

Understanding how the Belmont Report applies to racial equity can help you analyze how to strengthen your program's ethical standing and considerations, and can improve the accessibility of the program.

Trauma-Informed Evaluation

- Asking someone about trauma is asking that person to recall potentially difficult events from their past.

- If it is absolutely necessary for the evaluation to ask questions about potentially traumatic events, incorporate a trauma-informed approach to collect data in a sensitive way.

- Amherst H. Wilder Foundation Fact Sheet on Trauma-Informed Evaluation

- More information on Trauma-Informed Evaluation

- It may be beneficial to complete a human-subjects research training, online or in person, if you are working with vulnerable populations or have more questions about ethical review or the ethical review process.

- The Institutional Review Board (IRB) is an administrative body established to protect the rights and welfare of human research subjects recruited to participate in research activities.

- The IRB is charged with the responsibility of reviewing all research, prior to its initiation (whether funded or not), involving human participants.

- The IRB is concerned with protecting the welfare, rights, and privacy of human subjects.

- The IRB has authority to approve, disapprove, monitor, and require modifications in all research activities that fall within its jurisdiction as specified by both the federal regulations and institutional policy.

- More information on the IRB, including contact information for your local IRB

- Depending on the nature of the evaluation, the IRB may exempt a program from approval, but an initial review by the Board is recommended for all programs.

- If you are working in a community or district with its own public health department, first check with the local public health department before consulting the state-level public health department.

- If using health records from health care facilities, review patient privacy laws in your area.

- Most information from care facilities will be in electronic records, which have various safeguards to prevent unethical use.

- The use and transfer of protected health information (PHI) is regulated through multiple government policies, including the Health Information Technology for Economic and Clinical Health (HITECH) Act and the Health Insurance Portability and Accountability Act (HIPAA).

Step 5: Collect Data, Analyze, and Act

The fifth step in designing your evaluation is to implement the evaluation - Collect Data, Analyze, and Act! As part of an evaluation, you should:

- Collect data before, during, and after your program has completed

- Complete analysis after the completion of data collection

- Act upon your analysis by sharing evaluation results with stakeholders, and if needed, adapt future iterations of your program to address gaps identified through the evaluation

- Ensure the privacy and confidentiality of all participants.

- Select a sampling strategy that will allow for reporting on program outcomes.

- Consider whether quantitative and/or qualitative data collection methods are appropriate for reporting on program outcomes.

Privacy/Confidentiality

- Privacy and confidentiality of collected data should be ensured.

- Seek consent from all participants, or the guardians of participants, in your evaluation.

- Consider how you will secure data collected to protect sensitive information.

- Data stored electronically should, at minimum, be password-protected.

- In systems of inequality, ensuring confidentiality is important because the data collected could be used in a harmful manner to perpetuate stigmas against individuals or groups of people.

The CENTERED Project suggests evaluators review the questions below about confidentiality for all participants in data collection activities:

- Who are they? Do I need their name and other personal identifiers, or can I substitute a unique code and still satisfy the program’s needs?

- What is their role in the program and/or the evaluation? Client? Staff?

- Why is this data/information being requested? Why is it important for this person to participate?

- Who else will have access to the data? How will the data they provide be used?

- What are the consequences if they choose not to allow use of their data?

- What will happen if they decide, after agreeing to allow their data to be used, to change their mind? What impacts will this have on them? On the program?

- What types of data will be collected? On what topics?

- What kinds of things would have to be reported to authorities (e.g., child abuse) if they came out during data collection?

- What are the risks for those clients/staff members who agree to participate? What are their benefits?

- What incentives are you providing? How much time will this take?

- What will happen to the data/information once the evaluation is completed?
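One of the questions above asks whether a unique code can stand in for names and other personal identifiers. The sketch below shows one possible way to do that in Python. The field names (`name`, `session`, `satisfied`), the `P-` code prefix, and the sample responses are all hypothetical and are not part of the CENTERED Project guidance.

```python
import secrets

def assign_codes(names):
    """Give each distinct participant name a random, non-identifying code."""
    codes = {}
    used = set()
    for name in names:
        if name in codes:                 # same person seen again: keep their code
            continue
        code = "P-" + secrets.token_hex(4)
        while code in used:               # regenerate on the rare collision
            code = "P-" + secrets.token_hex(4)
        used.add(code)
        codes[name] = code
    return codes

def pseudonymize(responses, codes):
    """Return copies of the responses with 'name' replaced by the code."""
    cleaned = []
    for row in responses:
        new_row = {"participant": codes[row["name"]]}
        new_row.update({k: v for k, v in row.items() if k != "name"})
        cleaned.append(new_row)
    return cleaned

# Hypothetical survey responses, for illustration only
responses = [
    {"name": "Participant A", "session": "beginner", "satisfied": True},
    {"name": "Participant B", "session": "advanced", "satisfied": False},
]
codes = assign_codes(row["name"] for row in responses)
deidentified = pseudonymize(responses, codes)
```

In a setup like this, the `codes` lookup is the sensitive piece: it would need to be stored separately from the de-identified responses, with access limited to the people who genuinely need it.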

Sampling Strategies

- Sampling is the way that individuals are selected from a larger group to complete data collection activities.

- Generally, sampling strategies can be divided into non-probability sampling and probability (random) sampling.

- Sample size calculators are useful for determining the number of data points that are needed to detect a statistically significant difference.

- More information on sampling strategies
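As a rough illustration of what a sample size calculator does, the sketch below estimates how many responses are needed to report a proportion within a chosen margin of error. It uses Cochran's formula with a finite population correction, which is an assumption on our part: it is a simpler calculation than a power analysis for detecting a difference between groups, and the defaults (95% confidence, 5% margin of error, 50% expected proportion) are common conventions rather than NNLM requirements.

```python
import math
from statistics import NormalDist

def sample_size(population=None, margin_of_error=0.05,
                confidence=0.95, proportion=0.5):
    """Responses needed to estimate a proportion within the margin of error."""
    # z-score for a two-sided confidence level (about 1.96 for 95%)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Cochran's formula for a very large population
    n0 = (z ** 2) * proportion * (1 - proportion) / margin_of_error ** 2
    if population is None:
        return math.ceil(n0)
    # Finite population correction for smaller groups
    return math.ceil(n0 / (1 + (n0 - 1) / population))

print(sample_size())       # very large population
print(sample_size(2000))   # a community of 2,000 people
print(sample_size(20))     # a program with 20 participants
```

Under these assumptions, a program with 20 participants would need responses from all 20, which is why very small programs usually survey everyone instead of drawing a sample.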

Choosing a Sampling Strategy

- It can be helpful to have a community representative who works with the evaluation team as a moderator and who can assess and communicate the community’s history and concerns when a sampling strategy is chosen.

- Care should be given to ensure that the individuals located through sampling are safe and secure, and that power structures are recognized.

- Is a sample needed or is your program population small enough that it is feasible to survey everyone?

- For a program with 20 participants, for example, the group is small enough that creating a truly representative sample is likely more work than surveying everyone.

- However, if a program is operating across an entire region, collecting data from a sample of participants will likely save time and money and produce results similar to those from surveying everyone in the region.

- If a sample will be selected, will it be necessary to conduct probability (statistical) sampling?

- If it is not feasible to compile a list of sampling units, random selection (required for statistical samples) will not be possible.

- In addition, if you do not intend to generalize to the full participant population (i.e. you won't make conclusions about all participants based on data from a sample of participants), probability sampling is not necessary. Non-probability samples may provide enough information and are less cumbersome to select.

Non-probability Sampling

| Sampling strategy | Example use in programming working with an underrepresented population |

|---|---|

| Convenience sample | Select individuals visiting the library to participate in a program. |

| Quota sample | If you want to survey individuals who have used a new technology in the library, and you know from the community assessment that 40% of the community come from low-income families, you would ensure 40% of your survey respondents were technology users from low-income families. |

| Volunteer or self-selected sample | Ask all individuals who attended a seminar to complete a survey if they feel comfortable. |
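The quota sample row above can be turned into simple arithmetic. The sketch below splits a planned number of survey responses across groups by their share of the community, and then tracks how many responses are still needed per group. The group labels and the planned total of 50 responses are hypothetical; only the 40% figure comes from the example in the table.

```python
def quota_targets(total_respondents, percent_by_group):
    """Split a planned number of respondents across groups by whole-number percent."""
    if sum(percent_by_group.values()) != 100:
        raise ValueError("percentages must add up to 100")
    # Whole respondents each group is owed, rounding down
    targets = {g: total_respondents * p // 100 for g, p in percent_by_group.items()}
    # Hand out any leftover respondents to the groups with the largest remainders
    leftover = total_respondents - sum(targets.values())
    by_remainder = sorted(
        percent_by_group,
        key=lambda g: (total_respondents * percent_by_group[g]) % 100,
        reverse=True,
    )
    for group in by_remainder[:leftover]:
        targets[group] += 1
    return targets

def quota_still_open(targets, collected_so_far):
    """How many more responses each group needs before its quota is filled."""
    return {g: max(targets[g] - collected_so_far.get(g, 0), 0) for g in targets}

shares = {"low_income": 40, "other": 60}            # 40% from the example above
targets = quota_targets(50, shares)                 # hypothetical plan of 50 responses
remaining = quota_still_open(targets, {"low_income": 5, "other": 30})
```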

Probability Sampling

| Sampling strategy | Example use in programming working with an underrepresented population |

|---|---|

| Simple random sample | An evaluator wants to say that the program changes an outcome in an entire community. After calculating a sample size, a random number table is used to select households to survey. |

| Systematic random sample | From a list of all users of a new technology, an evaluator interviews every 5th person on the list. |

| Stratified sample | Begin by assigning participants to groups according to a common trait, such as sex or socioeconomic status. From there, a random group of participants from each stratum (group) would be selected to participate in the study. This method can be useful when analyzing the intersectionality of outcomes. |

| Cluster sample | This method can be useful if looking at outcomes across multiple locations, such as libraries or neighborhoods. First, the evaluator would define clusters that are roughly equal in size, then randomly select some of those clusters and sample within each selected cluster. |
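The four probability sampling strategies in the table can be sketched with Python's standard `random` module. This is a minimal illustration, not a prescribed procedure: the participant list, the `site` and `group` fields, and the sample sizes are made up, and the cluster example uses the common two-stage form (randomly pick clusters, then sample within the chosen clusters).

```python
import random

def simple_random_sample(units, n, rng):
    """Every unit has the same chance of selection."""
    return rng.sample(units, n)

def systematic_sample(units, step, rng):
    """Random starting point, then every `step`-th unit on the list."""
    start = rng.randrange(step)
    return units[start::step]

def stratified_sample(units, stratum_of, n_per_stratum, rng):
    """Group units by a shared trait, then sample randomly within each group."""
    strata = {}
    for unit in units:
        strata.setdefault(stratum_of(unit), []).append(unit)
    return {
        name: rng.sample(members, min(n_per_stratum, len(members)))
        for name, members in strata.items()
    }

def cluster_sample(clusters, n_clusters, n_per_cluster, rng):
    """Randomly pick whole clusters, then sample within the chosen clusters."""
    chosen = rng.sample(sorted(clusters), n_clusters)
    return {
        c: rng.sample(clusters[c], min(n_per_cluster, len(clusters[c])))
        for c in chosen
    }

rng = random.Random(2024)  # fixed seed so the sketch is repeatable
# Hypothetical participant list: 100 people across 4 sites and 2 trait groups
participants = [
    {"id": i, "site": f"site-{i % 4}", "group": "A" if i % 3 == 0 else "B"}
    for i in range(100)
]
srs = simple_random_sample(participants, 10, rng)
every_5th = systematic_sample(participants, 5, rng)
by_group = stratified_sample(participants, lambda p: p["group"], 5, rng)

sites = {}
for person in participants:
    sites.setdefault(person["site"], []).append(person)
two_sites = cluster_sample(sites, 2, 8, rng)
```

All four functions rely on having a complete list of sampling units, which matches the point made earlier: without such a list, random selection is not possible.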

Data Collection Methods

- Data collection that reflects cultural humility can more accurately capture the real-life experiences of the program participants.

- Using pre-designed data collection instruments can save time and provide results that are more generalizable to the population. However, pre-designed instruments may not work the same way and measure the same things across different populations. The degree to which they do is known as cross-cultural equivalence.

- To determine cross-cultural equivalence, find studies using and validating the data collection instruments for cross-cultural equivalence, or studies that have the same or similar population context as your evaluation.

- Field testing all data collection instruments (that is, practicing using each instrument in a real-life setting and altering it based on feedback and preliminary analysis) can help to improve the information collected.

| Data collection method | Example use in programming working with an underrepresented population |

|---|---|

| Questionnaire/ Survey | Questionnaires or surveys are a good solution for collecting data from a large group of participants, say an entire community, or from many different stakeholders, like program participants who are partaking in different program components. |

| Knowledge assessment | Knowledge assessments can be useful for a program that wants to understand if knowledge of a promoted resource or practice has changed over time. |

| Focus Group | Focus groups are a good way of collecting data from any stakeholder group whose members have shared a common role within the program. While knowledge assessments are critical for understanding if the program content has been understood, focus groups can be beneficial in helping program staff understand if the way in which the program has been implemented was effective, efficient, and ultimately sustainable. |

| Observation | Observations can assist evaluators in understanding how effectively their program is working over time. If a program is promoting the use of a new or different technology, it can be helpful to conduct observations of individuals who engage with the technology to see how they use it. |

| Interview | Interviews can serve to solicit specific information from individual stakeholders. A program manager may be interviewed to help uncover difficulties with program implementation. |

Quantitative Analysis

- Quantitative data are information gathered in numeric form.

- Analysis of quantitative data requires statistical methods.

- Results are typically summarized in graphs, tables, or charts.

- When a program works with an underrepresented population, the goal of data analysis is to maintain the experiences and voices of the program participants.

- Even the most rigorous of data analyses can be interpreted differently by different evaluation practitioners.

- Misinterpretation of data can easily occur when cultural standards are not fully understood.

- Example: A community may take more time to think and act on decisions, but an outside evaluator may say that it is a sign of disinterest in a program.

- It is important to be upfront and transparent about your own biases and assumptions during analysis and interpretation.

- Maintaining a clear chain of thought and having program participants review the analysis can help to limit the effects of personal biases and assumptions.

- Over-analyzing the data, where an evaluator completes multiple analytic techniques until they find a result that matches their expectations, can lead to personal biases being represented in the quantitative data.

- More information on quantitative analysis.
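The risk of over-analyzing data, noted above, can be made concrete with a little arithmetic. The sketch below estimates the chance of at least one false positive when several statistical tests are run on the same data, and shows the Bonferroni adjustment as one common safeguard. It assumes the tests are independent and uses the conventional 0.05 significance level; neither assumption comes from the source.

```python
def chance_of_false_positive(num_tests, alpha=0.05):
    """Chance that at least one of several independent tests is 'significant' by luck."""
    return 1 - (1 - alpha) ** num_tests

def bonferroni_threshold(num_tests, alpha=0.05):
    """Stricter per-test threshold that keeps the overall error rate near alpha."""
    return alpha / num_tests

for k in (1, 5, 10):
    print(k, round(chance_of_false_positive(k), 3), bonferroni_threshold(k))
```

Under these assumptions, running ten different tests gives roughly a 40% chance of finding at least one "significant" result by chance alone, which is why deciding on the analysis plan before looking at the data helps limit bias.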

Qualitative Analysis

- Qualitative data are information gathered in non-numeric form, usually in text or narrative form.

- Analysis of qualitative data relies heavily on interpretation.

- Qualitative data analysis can often answer the 'why' or 'how' of evaluation questions.

- Qualitative analysis is inherently more subject to the influences of the participants and the evaluator.

- Reflexivity, the self-awareness of the degree of influence an evaluator exerts over findings, is particularly important for evaluating programs that take place within power structures, where racism can consciously or subconsciously influence an evaluator’s findings.

- Member checking, where a participant in the qualitative data collection process reviews notes and findings, helps to ensure conclusions are fair representations of the situation.

- More information on qualitative analysis.

Ways to practice reflexivity:

- Keeping a record of what is influencing evaluation design choices.

- Practicing participatory evaluation by partnering with community members who will question your assumptions.

- Disclosing motivations for completing the evaluation.

- Avoiding dominating and leading interviews and focus groups - allowing the participants to guide the process.

- Characterizing the conditions under which data were produced.

- Member checking - where another person from the population you are assessing checks your analysis to avoid misinterpretations. A participant in the qualitative data collection would be an ideal member to check your interpretation.

- Organizing auditable documents and including steps taken to ensure reflexivity in all reports and presentations.

- Share information gathered in your evaluation with stakeholders to ensure they understand your program successes and challenges.

- Align stakeholders around the program and allow for planning for future iterations or versions of the program.

- Evaluations can be summarized as evaluation reports, PowerPoint presentations, dashboards, static infographics, and/or 2-page briefs, depending on the needs of stakeholders.

Meeting Stakeholder Needs

- Evaluations of programs working to reduce disparities between one racial/ethnic group and another group, or relative to dominant cultural norms, need to be transparent and fully detail their process from start to finish.

- All outcomes found should be placed in a larger context, including the influence of structural and systemic issues like government policies, resource allocation, intergenerational wealth and education, and racism.

- Creation and review of findings with community members and stakeholders can help ensure that information is presented in a respectful way.

- Responses to the outcomes found in the evaluation should include the direct involvement and decision making of multiple stakeholder groups and community members.

- Be open to other interpretations or responses to your evaluation findings.

- If a program is renewed for subsequent program cycles, then using the findings of the evaluation can improve how responsive the program is to the needs of program participants.

- Disseminating information from the evaluation back to the community that you worked with can help ensure accountability and clarify subsequent actions.

- Including the community that you worked with is a sign of respect, and it is an all too often forgotten piece of evaluation.

- One method of sharing findings is to hold a seminar with community members where you go over findings and hand out paper copies of the evaluation report to community members.

Example Evaluation Plan

The East Sohurst Library, an NNLM member, has designed a program to improve community members' access to health information resources and technology. The program, named HealthTech, will upgrade computer hardware in the library, and train librarians to better assist community members to access information. The East Sohurst Library serves a diverse community, located in an area where 53% of residents identify as Black or African American, 15% as Hispanic or Latino/a/x, and 6% as Asian or Asian American.

HealthTech will upgrade the three existing computers at the East Sohurst Library and add three more computers and associated hardware. Additionally, HealthTech will use the grant funding to allow three librarians to complete the NNLM course “Serving Diverse Communities,” so that they are better equipped to access culturally relevant health information and resources. At the end of the course, the three librarians will hold a seminar series for community members to share their newly acquired knowledge on health information resources. The series will include sessions for beginner, intermediate, and advanced skill levels, so that community members of all experience levels can take part.

The East Sohurst Library is currently applying for a grant through NNLM and is designing their evaluation plan as part of their grant application.

From the start of the evaluation, HealthTech decided to utilize participatory evaluation methods. The program itself was designed prior to receiving community input, but after learning about participatory methods, the HealthTech evaluation team decided to begin using them in the community assessment and evaluation.

SWOT Analysis

HealthTech identified three community leaders in East Sohurst who wanted to help lead the program. The SWOT analysis below, as well as a stakeholder analysis, was completed by HealthTech staff and the community leaders. From then on, all important decisions were made in collaboration with the community leaders, and monthly meetings were established for consistent communication with them.

The community assessment showed that significant health disparities exist between East Sohurst and neighboring West Sohurst. Most residents in West Sohurst identify as White or Caucasian. Persistent discrimination and racism toward the residents of East Sohurst have limited their economic mobility. Additionally, 15% of households in East Sohurst have an income of less than $25,000 per year, and many households report limited access to technology and health information at home.

Strengths

- Updates technology that library patrons use frequently to new standards

- Improves knowledge of librarians to be more responsive to community needs

- Library patrons are more easily able to access health information

- Knowledge gained by library staff is disseminated to library patrons

- Tiered training model makes disseminated information relevant for multiple experience levels

- NNLM resources become more widely used

- Health information becomes more widely known in East Sohurst

- Participatory evaluation methods

Opportunities

- Could increase knowledge of health information for both library patrons and librarians

- Could help to reduce health information/knowledge disparities between East Sohurst and West Sohurst

- Could improve health behaviors, and health seeking in East Sohurst

- Could allow for opportunities to partner with the community-at-large

- Could allow for leadership opportunities for community members

- Could showcase the library as a community leader

- Could build connections and bridges between the community and the library

Weaknesses

- East Sohurst community members must visit the library to utilize new technology

- Difficult to identify appropriate skill levels for tiered seminar series

- Health information coverage limited to library patrons

- Only 3 librarians attend NNLM training; other librarians and staff members do not

- Limited time and budget to complete activities and evaluation

- Program decided on objectives before seeking community input

Threats

- Technology could malfunction or break

- Lack of participation in seminar series

- It would be easy to get caught up in an urgent timeline and forgo community input in the program and evaluation

- Biases of HealthTech staff toward residents of East Sohurst could influence how the program is completed, and who makes decisions regarding the program

- Undetermined compensation methods for community leaders’ involvement could perpetuate power structures and devalue community input into HealthTech

- Racism and discrimination can make it difficult for East Sohurst residents to access the same resources as West Sohurst

- Transportation to the library can be challenging for community members

Logic Model

Developing a program logic model assists program staff and other stakeholders in defining the goals of a project and develops a road map for getting to the goals. The logic model is also used during the evaluation process to measure progress toward the program goals.

The HealthTech logic model is below.

Goal: Improve access to technology and health information at the East Sohurst Library

Inputs

What we invest

- Library staff member time for training on new material & liaising with community health partners

- Space/ resources for quarterly meetings

- Supplies for supplemental print health resources (booklets, pamphlets) and community advertising of Workshop Series

Activities

What we do

- Provide access to health workshops for LGBTQIA+ seniors through the library

- Provide supplemental print health resources for seniors to take home and reinforce learning

- Train library staff on sharing health resources with LGBTQIA+ community

Outputs

What can be counted

- LGBTQIA+ seniors registered to attend Workshop Series attend on a regular basis

- Seniors take home supplemental health resources from the library at the completion of sessions

- Librarians receive NNLM Health Resources training

Short-term Outcomes

Why we do it

- Seniors learn about health topics specific to them and learn of resources available to them through the community

- Seniors report gaining new knowledge after each session

- LGBTQIA+ seniors access other health resources available through their local library

- Library staff improve their knowledge of health resources available through the library that benefit LGBTQIA+ community members

Intermediate Outcomes

Why we do it

- Seniors change attitudes related to health matters relevant to them taught through library-based health workshops

- Library staff are seen by seniors as a resource for additional health information

Long-term Outcomes

Why we do it

- Seniors seek out assistance related to their health needs

- Seniors report changed behaviors related to health topics discussed as part of the workshop series

- Providing health assistance to seniors is part of at least one library staff member's official job description

Assumptions

- Local public health experts are open to partnering with the library for the duration of the program

- Seniors can physically visit the library

- Seniors find the material interesting and complete the workshop series

- Library staff members are dedicated to improving their practice

External Factors

- Library is only accessible via public transportation for some seniors (-)

- Library and public health experts are highly motivated to partner on this project (+)

Measurable Indicators

HealthTech reviewed the logic model with community leaders and decided upon a list of indicators that matched their goals. The table below shows some sample indicators.

| Outcome | Indicator |

|---|---|

| Short-term Outcome: New or updated computers are available for East Sohurst Library patrons | Total computers available to library patrons, number of new computers, number of updated computers |

| Intermediate Outcome: Increase in the use of health information resources by East Sohurst Library patrons | Proportion of library patrons that report using one or more health information resources available at East Sohurst Library |

| Long-term Outcome: Library patrons increase health knowledge | Percentage (%) of knowledge questions answered correctly on survey, based on the top community health issues identified during the community assessment |
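To show how indicators like these might be calculated from raw survey data, here is a minimal sketch. The survey field `resources_used`, the question labels, the answer key, and all of the sample responses are hypothetical; they simply mirror the intermediate and long-term indicators in the table above.

```python
def proportion_reporting_use(survey_rows):
    """Share of patrons who report using one or more health information resources."""
    users = sum(1 for row in survey_rows if row["resources_used"] >= 1)
    return users / len(survey_rows)

def percent_correct(answers, answer_key):
    """Percentage of knowledge questions a respondent answered correctly."""
    correct = sum(1 for q, right in answer_key.items() if answers.get(q) == right)
    return 100 * correct / len(answer_key)

# Hypothetical patron survey rows
survey_rows = [
    {"patron": "P-01", "resources_used": 0},
    {"patron": "P-02", "resources_used": 2},
    {"patron": "P-03", "resources_used": 1},
    {"patron": "P-04", "resources_used": 0},
]
# Hypothetical knowledge assessment with four questions
answer_key = {"q1": "b", "q2": "a", "q3": "d", "q4": "c"}
one_respondent = {"q1": "b", "q2": "a", "q3": "a", "q4": "c"}

use_rate = proportion_reporting_use(survey_rows)
score = percent_correct(one_respondent, answer_key)
```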

Evaluation Design & Questions

For their evaluation, the HealthTech team selected a pre-posttest design in order to address all outcomes in their logic model. The team considered a pre-posttest with follow-up design but decided that the resources are not currently available to complete a high-quality follow-up stage.
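A pre-posttest comparison can be as simple as matching each participant's score before the program to their score afterward. The sketch below assumes knowledge scores are stored by a non-identifying participant code; the codes and scores are invented for illustration, and participants missing either score are left out of the comparison.

```python
from statistics import mean

def paired_change(pre_scores, post_scores):
    """Compare scores for participants who completed both the pre- and post-test."""
    matched = sorted(set(pre_scores) & set(post_scores))
    diffs = [post_scores[code] - pre_scores[code] for code in matched]
    return {
        "matched_participants": len(matched),
        "mean_change": mean(diffs),                 # average point change
        "improved": sum(1 for d in diffs if d > 0), # how many scored higher
    }

# Hypothetical percent-correct scores keyed by participant code
pre = {"P-01": 50, "P-02": 60, "P-03": 70, "P-04": 40, "P-05": 80}
post = {"P-01": 70, "P-02": 60, "P-03": 80, "P-04": 65}  # P-05 did not return

summary = paired_change(pre, post)
```

A summary like this describes change among participants who completed both tests. Without a comparison group, it cannot by itself show that the program caused the change.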

The HealthTech team decided to compare information collected on indicators before the program was implemented to outcomes at the end of the program. In addition to the evaluation plan, they discussed the burdens of completing the evaluation on the community, and the benefits of completing the evaluation. Example process and outcome evaluation questions for the HealthTech program are below.

Process Questions

| Process Questions | Information to Collect | Methods |

|---|---|---|

| Were program activities accomplished? | Did all 3 selected library staff complete the NNLM training in a timely manner? Were all 6 computers and associated hardware set up by the deadline? Were all 3 seminars completed? | Focused staff feedback sessions; Observation of seminars |

| Were the program components of quality? | Were the selected library staff able to apply lessons learned in the training? Were newly installed computers able to complete a series of performance and quality checks? Were seminar participants satisfied with the seminars? Did seminars meet objectives? | Observations of seminars; Survey of seminar participants; Computer quality check; Interviews of librarians who completed training and gave seminars |

| How well were program activities implemented? | Were all activities completed on time and within budget? How many individuals attended the seminars? | Focused staff feedback sessions; Review of program records |

| Was the target audience reached? | Were attendees at the seminars from East Sohurst? Were the races/ethnicities of the attendees present at the seminars reflective of the community-at-large? | Observations of seminars; Survey of seminar participants |

| Did any external factors influence the program delivery, and if so, how? | Did income level or race/ethnicity change who attended the seminars? | Survey of seminar participants |
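One way to approach the question of whether seminar attendees reflected the community-at-large is to compare each group's share of attendees with its share of the community. In the sketch below, the community percentages for the three named groups come from the East Sohurst description. The "All other residents" category (the remaining 26%) and every attendee count are assumptions made only for illustration.

```python
def share_gaps(attendee_counts, community_percent):
    """Attendee share minus community share, in percentage points, per group."""
    total = sum(attendee_counts.values())
    gaps = {}
    for group, community_pct in community_percent.items():
        attendee_pct = 100 * attendee_counts.get(group, 0) / total
        gaps[group] = round(attendee_pct - community_pct, 1)
    return gaps

community = {
    "Black or African American": 53,
    "Hispanic or Latino/a/x": 15,
    "Asian or Asian American": 6,
    "All other residents": 26,   # assumed remainder, not stated in the example
}
# Hypothetical self-reported counts from a seminar participant survey
attendees = {
    "Black or African American": 30,
    "Hispanic or Latino/a/x": 6,
    "Asian or Asian American": 2,
    "All other residents": 12,
}

gaps = share_gaps(attendees, community)
```

Positive values mean a group made up a larger share of attendees than of the community, and negative values mean a smaller share. With small seminar groups, gaps of a few percentage points can come from only one or two people, so they are best discussed with community leaders before drawing conclusions.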

Outcome Questions

| Outcome Questions | Information to Collect | Methods |

|---|---|---|

| Short-term outcomes | By the end of the program, did library patrons change their use of library technology? By the end of the program, did library staff's knowledge of health information resources and ways to serve East Sohurst change? At the end of the program, did selected library staff believe that their ability to assist library patrons in finding relevant health information had changed? By the end of the program, did library patrons gain new knowledge on how to access health information? | Survey of library patrons; Observation of how individuals use computers; Survey of seminar participants; Interviews of librarians who completed training and gave seminars |

| Intermediate outcomes | Did the number of East Sohurst residents' visits to the library change? Did the number of East Sohurst residents using the new library technology change? Did a greater proportion of library patrons seek assistance from library staff to locate health information? Did observations of library patrons' use of health information resources when visiting the library detect any changes in the way the resources were used? Did the proportion of library staff's interactions with library patrons that resulted in successfully locating or using health information resources change? | Counts of library patrons; Observation of how individuals use computers; Survey of library patrons |

| Long-term outcomes | Did library patrons' health knowledge change over time? | Survey of library patrons |